Speed up tree inference 5x with treelite

Author: Rand Xie (@Randxie29)

Tree models play an important role in machine learning. In particular, gradient boosting decision trees (GBDT) are widely used for tabular data, since GBDT often gives better out-of-the-box performance than neural networks on such data (one reason being that GBDT effectively performs global feature selection). As a result, tree inference speed becomes important once these models are deployed in production.

If we look deeper into tree models, inference basically comes down to evaluating a large number of if-else branches. For a forest of trees, you can evaluate different trees on different cores in parallel to get a certain amount of speedup.
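To make the "bunch of if-else branches" view concrete, here is a minimal Python sketch. The `Tree` class, its node layout and the helper functions are hypothetical illustrations, not XGBoost or treelite APIs. The chunked version runs sequentially here; it only shows how the work can be split, which a real runtime would spread across cores.

```python
from typing import Dict, List, Sequence, Tuple


class Tree:
    """A hypothetical flat layout for one regression tree (not XGBoost's internal format)."""

    def __init__(self, nodes: Dict[int, Tuple[int, float, int, int]], leaves: Dict[int, float]):
        self.nodes = nodes    # node id -> (feature index, threshold, left child id, right child id)
        self.leaves = leaves  # node id -> leaf value

    def predict(self, row: Sequence[float]) -> float:
        node_id = 0  # start at the root
        while node_id not in self.leaves:
            feature, threshold, left, right = self.nodes[node_id]
            # One if-else branch per level: go left when the value is below the threshold.
            if row[feature] < threshold:
                node_id = left
            else:
                node_id = right
        return self.leaves[node_id]


def predict_forest(trees: List[Tree], row: Sequence[float]) -> float:
    # A GBDT prediction (ignoring the base score and any link function) is the sum of per-tree outputs.
    return sum(tree.predict(row) for tree in trees)


def predict_forest_chunked(trees: List[Tree], row: Sequence[float], n_chunks: int) -> float:
    # Trees do not depend on each other, so the forest can be split into chunks whose
    # partial sums are computed independently (e.g. one chunk per core) and then added.
    chunks = [trees[i::n_chunks] for i in range(n_chunks)]
    return sum(predict_forest(chunk, row) for chunk in chunks)


tree_a = Tree(nodes={0: (0, 0.5, 1, 2)}, leaves={1: -1.0, 2: 1.0})
tree_b = Tree(nodes={0: (1, 2.0, 1, 2)}, leaves={1: 0.25, 2: 0.75})
print(predict_forest([tree_a, tree_b], [0.7, 1.0]))             # 1.25
print(predict_forest_chunked([tree_a, tree_b], [0.7, 1.0], 2))  # 1.25
```

Splitting across trees helps with many trees, but each individual tree is still a chain of data-dependent branches, which is what treelite targets.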

Now the question is: can we push it even further? The treelite paper [1] provides a viable path to further speed up the inference of each individual tree. I have tested the treelite library in production, and it sped up my XGBoost model (3,000 trees, `max_depth` = 8) relative to a baseline of a plain XGBoost `model.predict` call.
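If you want to run a similar comparison on your own model, the speedup is just a ratio of latencies. Below is a minimal, hedged benchmarking sketch using only the standard library; `slow_predict` and `fast_predict` are hypothetical placeholders standing in for the XGBoost `model.predict` baseline and the treelite-compiled predictor, so the printed number says nothing about treelite itself.

```python
import statistics
import time
from typing import Any, Callable


def median_latency(predict: Callable[[Any], Any], batch: Any, repeats: int = 20) -> float:
    """Median wall-clock seconds of predict(batch), after one warm-up call."""
    predict(batch)  # warm-up: exclude one-time costs such as lazy loading
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        predict(batch)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def speedup(baseline: Callable[[Any], Any], candidate: Callable[[Any], Any],
            batch: Any, repeats: int = 20) -> float:
    """How many times faster `candidate` is than `baseline` on the same batch."""
    return median_latency(baseline, batch, repeats) / median_latency(candidate, batch, repeats)


# Hypothetical placeholder predictors; replace them with the real baseline and candidate.
def slow_predict(batch):
    return [sum(range(1000)) for _ in batch]


def fast_predict(batch):
    return [0 for _ in batch]


print(f"{speedup(slow_predict, fast_predict, list(range(100))):.1f}x")
```

Using the median rather than the mean keeps one-off pauses (GC, other processes) from dominating the ratio.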

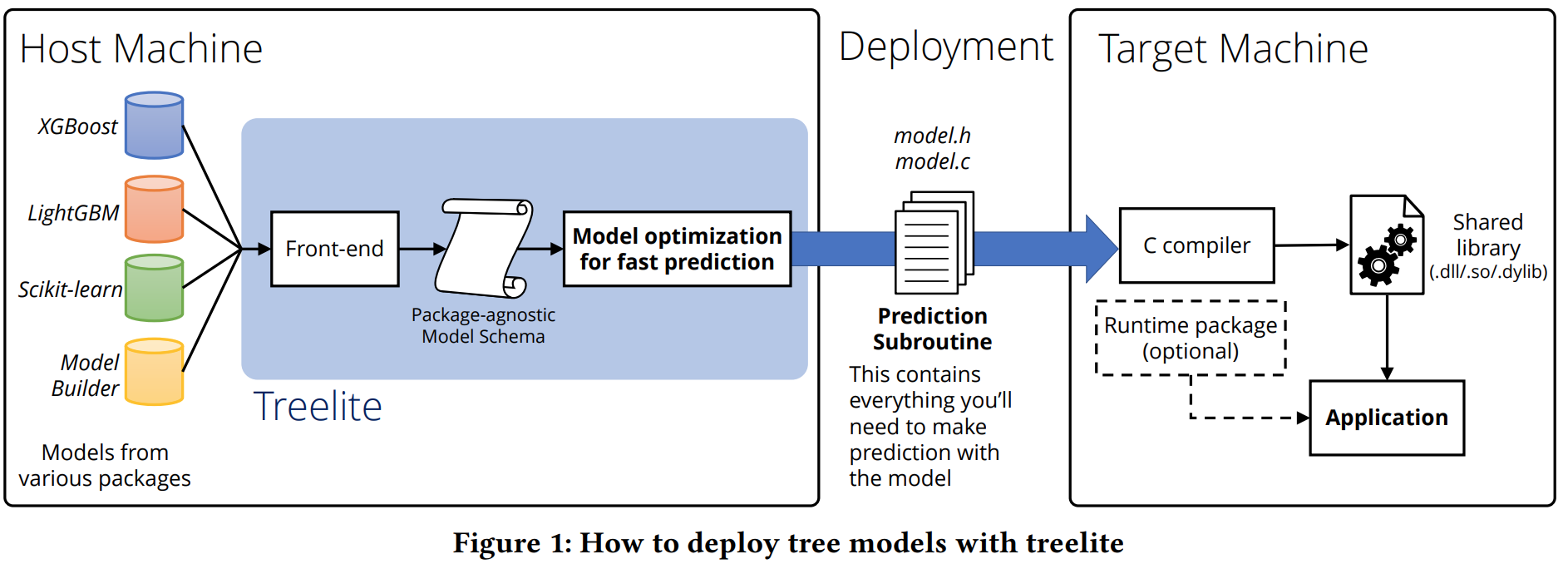

Treelite converts tree models from different packages, including XGBoost, LightGBM and scikit-learn, into a common intermediate representation. The intermediate representation drops information that is irrelevant to inference, so the final saved model is also smaller.
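As a rough illustration of why the intermediate representation is smaller, the sketch below converts verbose node records into a compact form that keeps only what is needed to route a row. The record format is loosely modeled on XGBoost's JSON dump, but the exact field names and the compact layout are assumptions for illustration, not treelite's actual format.

```python
from typing import Dict, List, Tuple, Union

# Hypothetical verbose records for one tree: besides what routing a row needs,
# nodes carry training statistics (gain, cover) and bookkeeping (depth).
VERBOSE_TREE: List[dict] = [
    {"nodeid": 0, "depth": 0, "split": 0, "split_condition": 0.5,
     "yes": 1, "no": 2, "gain": 12.3, "cover": 100.0},
    {"nodeid": 1, "leaf": -0.4, "cover": 60.0},
    {"nodeid": 2, "leaf": 0.3, "cover": 40.0},
]

# Compact IR: split node -> (feature, threshold, left id, right id); leaf -> value.
IRNode = Union[Tuple[int, float, int, int], float]


def to_ir(verbose_nodes: List[dict]) -> Dict[int, IRNode]:
    ir: Dict[int, IRNode] = {}
    for node in verbose_nodes:
        if "leaf" in node:
            ir[node["nodeid"]] = node["leaf"]
        else:
            # Keep only what inference needs; gain, cover and depth are dropped.
            ir[node["nodeid"]] = (node["split"], node["split_condition"], node["yes"], node["no"])
    return ir


def stored_values(verbose_nodes: List[dict]) -> int:
    # Values per node other than the node id itself.
    return sum(len(node) - 1 for node in verbose_nodes)


ir = to_ir(VERBOSE_TREE)
print(ir)                           # {0: (0, 0.5, 1, 2), 1: -0.4, 2: 0.3}
print(stored_values(VERBOSE_TREE))  # 11
print(sum(len(v) if isinstance(v, tuple) else 1 for v in ir.values()))  # 6
```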

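Once the intermediate representation is generated, treelite emits C code for the model, which is then compiled into a shared library; this gives the compiler a chance to optimize how branches are laid out. In particular, the magic is `__builtin_expect`, a GCC/Clang builtin that tells the compiler which outcome of a branch is more likely. The compiler uses this hint to arrange the machine code so that the likely path falls through, which helps the CPU prefetch the right instructions and makes branches that go the expected way cheaper.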
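To show what branch-hinted code looks like, here is a minimal Python sketch that generates C source for one tree and wraps each split condition in a `__builtin_expect` hint. The `LIKELY`/`UNLIKELY` macros, the `emit_c` function and the `likely_left` mapping are my own assumptions for illustration; treelite's real code generator is more involved.

```python
from typing import Dict, List, Tuple, Union

IRNode = Union[Tuple[int, float, int, int], float]


def emit_c(ir: Dict[int, IRNode], likely_left: Dict[int, bool], func_name: str = "predict_tree") -> str:
    """Emit C source for one tree, wrapping each split condition in a branch hint."""
    lines: List[str] = [
        "#define LIKELY(x) __builtin_expect(!!(x), 1)",
        "#define UNLIKELY(x) __builtin_expect(!!(x), 0)",
        f"float {func_name}(const float *x) {{",
    ]

    def emit(node_id: int, indent: str) -> None:
        node = ir[node_id]
        if not isinstance(node, tuple):  # leaf: return its value
            lines.append(f"{indent}return {float(node)!r}f;")
            return
        feature, threshold, left, right = node
        # Mark the "go left" condition as likely or unlikely according to the hint.
        hint = "LIKELY" if likely_left[node_id] else "UNLIKELY"
        lines.append(f"{indent}if ({hint}(x[{feature}] < {float(threshold)!r}f)) {{")
        emit(left, indent + "  ")
        lines.append(f"{indent}}} else {{")
        emit(right, indent + "  ")
        lines.append(f"{indent}}}")

    emit(0, "  ")
    lines.append("}")
    return "\n".join(lines)


ir = {0: (0, 0.5, 1, 2), 1: -0.4, 2: 0.3}
src = emit_c(ir, likely_left={0: True})
print(src)
```

The Python only produces source text; compiling it with GCC or Clang is what lets the hints influence code layout.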

For tree models, the hint for each split is computed from the training data by comparing how often samples went to the left child versus the right child. The detailed implementation can be found in `ast_native.cc`.
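A minimal sketch of how such hints could be derived: route each training row through the tree, count left and right visits per split, and mark the more frequent side as likely. The `branch_hints` function and the IR layout are hypothetical, and breaking ties toward the left is an arbitrary choice here; this is not a transcription of `ast_native.cc`.

```python
from typing import Dict, Sequence, Tuple, Union

IRNode = Union[Tuple[int, float, int, int], float]


def branch_hints(ir: Dict[int, IRNode], rows: Sequence[Sequence[float]]) -> Dict[int, bool]:
    """For each split node, True if at least as many rows went left as right."""
    left_count = {nid: 0 for nid, node in ir.items() if isinstance(node, tuple)}
    right_count = dict(left_count)
    for row in rows:
        node_id = 0
        while isinstance(ir[node_id], tuple):
            feature, threshold, left, right = ir[node_id]
            if row[feature] < threshold:
                left_count[node_id] += 1
                node_id = left
            else:
                right_count[node_id] += 1
                node_id = right
    return {nid: left_count[nid] >= right_count[nid] for nid in left_count}


# Hypothetical two-level tree and a handful of "training" rows.
ir = {0: (0, 0.5, 1, 4), 1: (1, 2.0, 2, 3), 2: -1.0, 3: 0.5, 4: 1.0}
rows = [[0.1, 1.0], [0.2, 3.0], [0.3, 3.5], [0.9, 0.0]]
print(branch_hints(ir, rows))  # {0: True, 1: False}
```

The resulting mapping is exactly the kind of per-node flag a code generator needs to decide between a "likely" and an "unlikely" annotation.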

To summarize, we went over how treelite uses branch hints to speed up tree inference at the CPU level, with a reduced model size as an extra benefit. However, because the intermediate representation drops information stored in the nodes, treelite may not be able to support advanced features such as model explainability. For example, SHAP uses the statistics stored in each node to compute feature attributions.
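As a small example of the kind of node statistic that gets lost: SHAP's path-dependent tree explainer relies on per-node training counts ("cover"), and the simplest quantity built from them is a tree's expected output, the cover-weighted mean of its leaf values. The sketch below computes it; the function name and numbers are hypothetical, and it is only one ingredient of TreeSHAP, not the full algorithm.

```python
from typing import Dict


def expected_tree_output(leaf_values: Dict[int, float], leaf_covers: Dict[int, float]) -> float:
    """Cover-weighted mean of the leaf outputs, i.e. the tree's average prediction
    over the training data (a quantity SHAP's path-dependent tree explainer relies on)."""
    total_cover = sum(leaf_covers[nid] for nid in leaf_values)
    return sum(leaf_values[nid] * leaf_covers[nid] for nid in leaf_values) / total_cover


leaf_values = {1: -0.4, 2: 0.3}
leaf_covers = {1: 60.0, 2: 40.0}  # hypothetical training counts per leaf
print(expected_tree_output(leaf_values, leaf_covers))  # -0.12
```

With only the leaf values, as in an inference-only representation, there is nothing to weight the leaves by, so this value cannot be recovered.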

Reference: